Blood Suction Using Reinforcement Learning

.gif)

Project Date: July, 2023

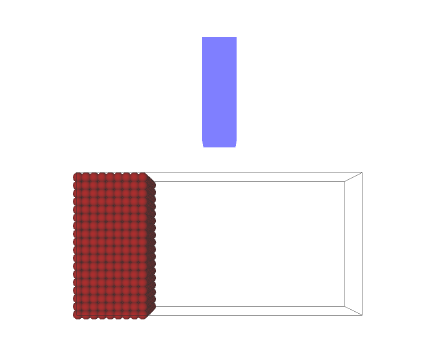

The Blood Suction Simulator is designed to model the dynamics of blood particles during a surgical suction process. The primary objective of this simulator is not to create a visually realistic portrayal of blood flow but to accurately represent the physical behavior of blood under suction. The RL model was trained using Soft Actor-Critic (SAC) algorithm, giving input the blood region as an image mask.

Particles are updated using Verlet integration, which provides stability and accuracy in the simulation of motion. Each particle's state is updated based on its interactions with other particles and the environment. The system calculates forces resulting from collisions between particles and with walls, ensuring realistic particle behavior without overlapping or unrealistic merging. The suction tool's influence is modeled through a force that decays with distance from the tool, applied in the direction of the tool's position. This simulates the decrease in suction effect as distance increases.

Soft Actor-Critic (SAC) can be effectively used to train the agent for the blood suction task, using its ability to handle continuous action spaces and optimize complex reward structures. In SAC, the agent learns a policy \( \pi(a|s) \) that outputs actions (e.g., suction parameters) given the state (image mask of blood regions). The algorithm optimizes a stochastic policy by maximizing the soft value function:

\[ J(\pi) = \mathbb{E}_{s_t, a_t \sim \pi} \left[ Q(s_t, a_t) - \alpha \log \pi(a_t|s_t) \right], \]

where \( Q(s_t, a_t) \) estimates the expected return, and \( \alpha \) controls the trade-off between exploration (high entropy) and exploitation (low entropy). SAC uses two neural networks: a critic network to approximate \( Q(s, a) \) using the Bellman equation and an actor network to model \( \pi(a|s) \). The image mask is processed via convolutional layers, extracting features fed into these networks. During training, the agent observes the current image mask, selects actions, and receives a reward based on the reduction in blood regions. This reward encourages the agent to optimize suction actions while ensuring robust exploration through the entropy term, making SAC suitable for dynamic and uncertain environments like your simulator.

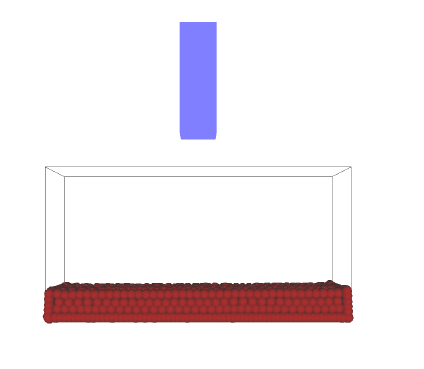

The agent efficiently controled the suction tool, minimizing blood regions in the image mask while dynamically adapting suction intensity, position, and duration based on the blood distribution. The learned policy prioritized dense regions initially and fine-tune actions to clear residual spots, achieving high removal efficiency across various scenarios. Results included significant reductions in residual blood (83), faster and more precise operations compared to heuristic methods, and robust adaptability to diverse environments.

Technologies Used

- C++ library for Verlet integration

- NVIDIA RTX GPU

- My brain

Project Gallery